The Force Awakens: Hybrid Blockchain Runtime Monitors (Solarkraft #4)

» This guest post by Andrey Kuprianov first appeared on his blog.

Solarkraft has been developed in collaboration by Igor Konnov, Jure Kukovec, Andrey Kuprianov and Thomas Pani.

This is the fourth in a series of blog posts introducing Solarkraft, a TLA+-based runtime monitoring solution for Soroban smart contracts. The first post, “A New Hope – Why Smart Contract Bugs Matter and How Runtime Monitoring Saves the Day” gives an overview of smart contracts, explains how traditional security fails to address major challenges in securing crypto assets, and introduces runtime monitoring as a solution. The second post, “Guardians of the Blockchain: Small and Modular Runtime Monitors in TLA+ for Soroban Smart Contracts” introduces the basic language of Solarkraft monitors. The third post, “How to Run Solarkraft” gives an overview of the various features of Solarkraft, and explains how to use each one, step-by-step.

While the previous posts explain the current state of the project, in this one we take one step further, and explore the directions in which we plan to evolve blockchain runtime monitoring with Solarkraft. Throughout the post we are using the same timelock contract from soroban-examples that was used in Part 2: “Guardians of the Blockchain”; please explore at least this post first to acquire the necessary context.

Blockchain Runtime Monitors

Runtime monitoring, also known as runtime verification, is a well-established field, where many practical approaches have been devised and applied successfully. Based on this heritage, we proposed the first version of a Web3 runtime monitoring system for the Stellar blockchain in Part 2: “Guardians of the Blockchain”. Our system is based on the TLA+ specification language, a well-established formalism for specifying and verifying distributed systems.

Taking a step back from the concrete solution, let’s try to answer the more abstract question: What do we want to achieve with runtime monitors in blockchains? As runtime monitors are eventually going to be deployed and executed on the live blockchain, they should satisfy the following requirements:

- Prevent from safety violations (safety): bad things, such as your token balance being stolen, should not happen. This is the primary goal of runtime monitors: react preventively, and abort unwanted executions.

- Detect liveness violations (liveness): good things should be able to happen! E.g. you, as an account owner, should be able to withdraw your own balance. If a legitimate transaction keeps reverting, that’s also a bug, not less severe than someone stealing your tokens.

- Detect unexpected behaviors (completeness): same as code, specs are not perfect. If a spec author overlooked some behaviors, and they manifest themselves on the live blockchain, this may mean anything: from simple spec incompleteness, to an exploit being executed. Monitors should be configurable to either issue a simple warning, or to prevent such behaviors altogether.

The problem we’ve observed with the previously employed approaches to formal specification is that the specs of what the method should do can easily be much larger than the actual implementation. So we would like to add to the above the following soft requirement:

- Specify behaviors compactly and independently (compactness and modularity): it is usually the case that a smart contract encompasses a lot of various aspects (e.g. authentication, authorization, storage management, math computations), and is written/employed/reasoned about by various roles (e.g. smart contract developer, mathematician, architect, UI developer). All of those roles should be able to specify various aspects of the smart contract behavior as easily and as independently as possible.

So monitors should be able to specify both safety and liveness properties, be complete wrt. the current and future system behaviors, and, preferably, also be compact and modular. For that we propose a conceptual separation of monitors into direct monitors (those reasoning from cause to effect), and reverse monitors (those going from effect to cause). We can combine the two together in what we call hybrid monitors.

Direct Monitors

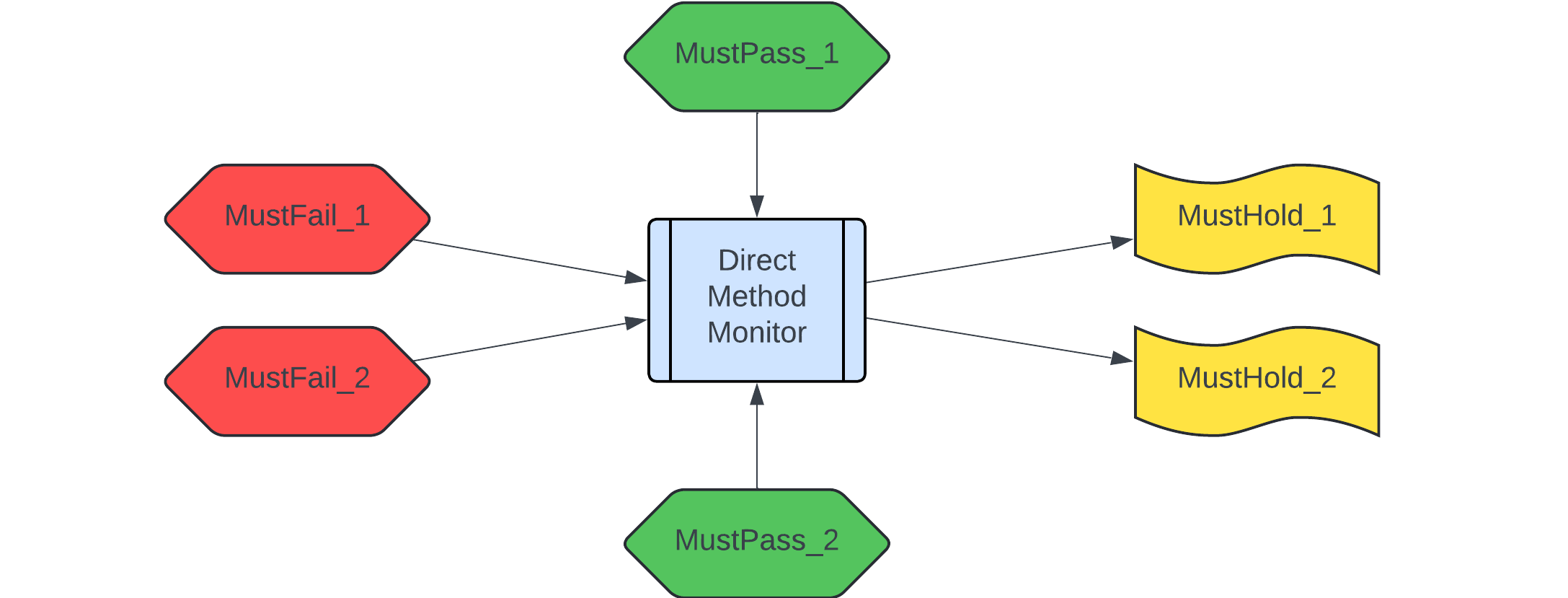

Here we reason from the cause (method invocation) to the effect, but apply a structure which closely mimics, in formal semantics, what we expect to see when we program smart contracts. The essence of the structure is in the picture below:

In direct monitors, we distinguish three kinds of conditions:

MustFail_iis a condition under which the method is expected to fail. If any of those conditions hold, the monitor activates, and checks that the method does indeed fail;MustPass_iis a condition, under which the method is expected to succeed, provided that none of theMustFail_iconditions hold. EachMustPass_icondition represents a separate happy path in the method invocation;MustHold_iis a condition that should hold after the method invocation is successful (e.g. the tokens should be transferred). Unlike the previous two categories, which reason only about the state of the system before the method invocation, these properties may reference both the post-method state, and the pre-method state. All ofMustHold_ishould hold if the method is executed successfully.

In the above, Must<Fail|Pass|Hold> is a prefix, which tells the monitor system how to interpret this predicate. The complete pattern for predicate names with these prefixes is as follows:

Must<Fail|Pass|Hold>_<Method>_<ConditionDescription>

All predicates which refer to the same <Method> will be grouped, to create together one method monitor. Interpreted formally, the monitor should do the following when <Method> is invoked:

- If any of

MustFail_iconditions hold, check that method invocation reverts (otherwise, issue a warning / revert if configured to do so) - If none of

MustFail_iconditions hold, but method invocation reverted, issue a warning (incomplete spec) - If none of

MustFail_ihold, and one ofMustPass_iconditions hold, check that method invocation succeeds (otherwise, issue a warning) - If none of

MustFail_ihold, and none ofMustPass_iconditions hold, but method invocation succeeded, issue a warning of an incomplete spec (or revert if configured to do so) - If method invocation succeeds, check that all of

MustHold_iconditions hold on the pre- and post-states of the method invocation (otherwise, issue a warning / revert if configured to do so)

Notice that above we apply or as default connector for preconditions (MustFail_i / MustPass_i), and we apply and as default connector for effects (MustHold_i). Thus, you may split preconditions/effects into separate predicates at your convenience, avoiding complicated logical structure inside predicates themselves.

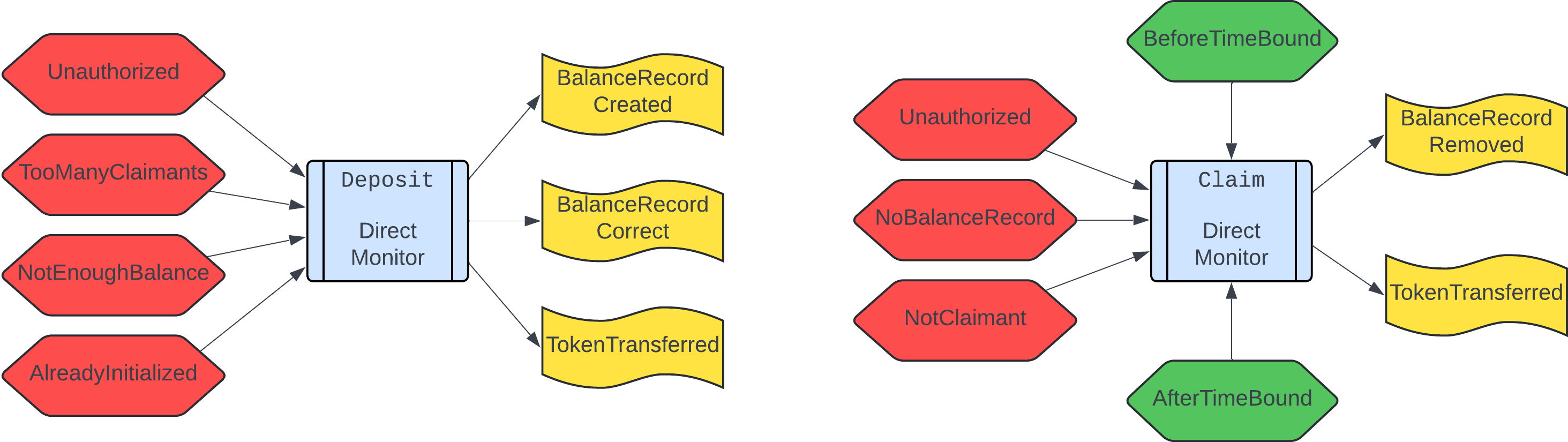

Direct monitors for the Timelock contract

Having outlined the general structure of direct monitors, let’s apply it to the Timelock contract. Direct monitors for Timelock’s deposit and claim methods can be found in deposit.tla and claim.tla respectively; below we depict only the structure of these monitor specifications (we omit Must<Fail|Pass|Hold> as well as the method names for clarity).

As can be seen, a direct method monitor is decomposed into a collection of independent and small monitors, i.e. we did achieve our (soft) goal of compactness and modularity. Safety and liveness goals also seem to be satisfied:

- Safety: Timelock’s direct monitors guarantee numerous safety properties. A safety property is usually ensured by either MustFail, or MustHold, or a combination of both conditions. For example:

- The property “only the approved claimant may claim the deposit” is ensured by the NonClaimant sub-monitor;

- The property “the Timelock contract receives the deposited funds from the claimant” is ensured by the combination of NotEnoughBalance and TokenTransferred sub-monitors.

- Liveness: Timelock’s liveness properties are guaranteed by the MustPass conditions:

- Implicit in case of

deposit(whatever doesn’t fail, should succeed); - Explicit in case of

claim: a claim happening before the time bound, when its kind isBefore, should succeed due to BeforeTimeBound (provided all other conditions are met); similarly, a claim happening after the time bound, when its kind isAfter, should succeed due to AfterTimeBound.

- Implicit in case of

Can the described approach of direct monitors be considered satisfactory? Please stop to think about it for a sec, before opening our answer below.

Are direct monitors sufficient?

You may have guessed the answer: we believe NO! And here is an (incomplete) list of reasons why:

- A method may have a side effect, which was overlooked by the spec author. E.g. a boolean flag gets set, which allows the redirection of funds to another account in a different method.

- Code evolves, but the spec stays as is; as a result a side effect, like the one above, is introduced unintentionally, with the stale spec not accounting for it.

- The same internal state component is modified by multiple methods, in different ways. The specification of how the component should be updated is scattered across multiple places, and loopholes may easily creep in.

- An invariant which is preserved by the method of this contract, is violated by a method from another contract. As no specs are written or monitored for this other contract, no violation is detected.

Reverse Monitors

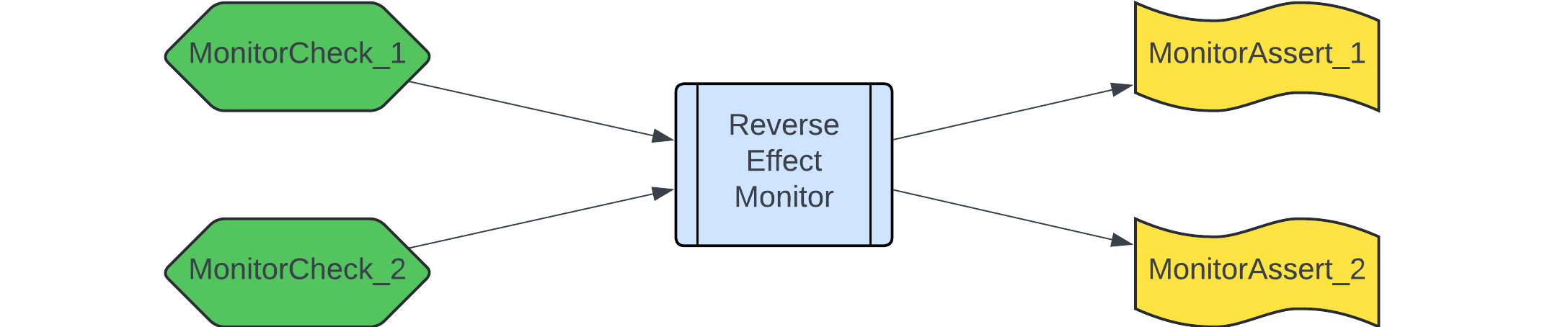

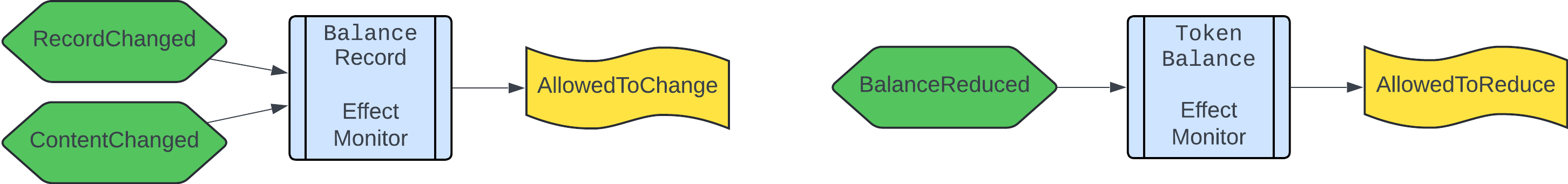

With reverse reasoning we will try to patch the loopholes that were left by direct monitor specs above. To do so, we start with an effect (state was modified), and go back to its cause (what should have happened taking the effect into account). Here is the corresponding picture which puts a bit of structure into the reverse reasoning.

In reverse monitors, we distinguish two kinds of conditions:

MonitorCheck_iis a condition over past and next state variables, which activates the monitor. If any of those conditions hold, the monitor is activated. These conditions should not be confused with preconditionsMustFail/MustPassfrom direct monitors: unlike those, aMonitorCheckcan express conditions like “a state variable has changed its value.”MonitorAssert_iis a condition over past and next state variables, which the monitors requires . All ofMonitorAssert_ishould hold if the transaction is successful. These conditions are the same asMustHoldin direct monitors, and express a post-condition.

Thus, the main difference wrt. direct monitors is how monitor activation is expressed: while direct monitors are activated by observing the cause (a method invocation with specific parameters), reverse monitors are activated by observing the effect (a change in state variables). Another difference is that Monitor<Check|Assert> conditions are method-unspecific, and can refer only to the state variables and the environment, but not to the method parameters.

In the above, Monitor<Check|Assert> is a prefix, which tells the monitor system how to interpret this predicate. The complete pattern for predicate names with these prefixes is as follows:

Monitor<Check|Assert>_<Monitor>_<ConditionDescription>

All predicates which refer to the same <Monitor> will be grouped, to create together one effect monitor. Interpreted formally, the monitor should do the following when activated:

- If any of

MonitorCheck_iconditions hold, ensure that allMonitorAssert_ihold over the past and next states (otherwise, issue a warning / revert if configured to do so)

Again, imposing a simple structure which combines triggers with or, but effects with and allows you to avoid cumbersome logic inside monitor predicates.

Let’s take a look at how reverse monitor specs help us to patch the loopholes described above:

- Overlooked side effects: a reverse monitor will detect all changes to the monitored state, no matter where they originate.

- Side effects introduced during system evolution: same as above. Additionally, if an effect monitor is configured to revert in case of unexpected effects taking place, the developers will be forced to keep the spec in sync with the implementation.

- Inconsistent / spread-out specs: An effect monitor may describe all effects that may happen wrt. a particular state component in one place. As this monitor will be triggered when any of the methods that modify the state is executed, this also brings us considerable savings in terms of spec size/complexity, as similar effects can be grouped together.

- Unrestricted access from other contracts/methods: as in 1. and 2., it doesn’t matter again where the modification comes from: if the state component we monitor is being changed, the monitor will detect it.

Reverse monitors for the Timelock contract

We apply the general structure of reverse monitors to the Timelock contract; below we depict only the structure of Timelock’s reverse monitors (we omit Monitor<Check|Assert> as well as the monitor names for clarity).

Timelock’s reverse monitors are not specific to the smart contract methods, but rather formulate two important completeness conditions:

- “Timelock’s balance record is allowed to be updated only by

depositorclaimmethods”: see the complete specification in file balance_record.tla. - “Timelock’s contract balance in the deposited token is allowed to be reduced only by

claimmethod”: see the complete specification in file token_balance.tla.

Having these conditions in place does indeed make the combination of Timelock’s direct and reverse monitors, which we call a hybrid monitor, complete both wrt. deficiencies in direct monitor specifications, and wrt. future code changes.

Updated on 26.06.2024: replaced Monitor<Trigger|Effect> with Monitor<Check|Assert>

Done reading? Then proceed to the final post of this blog post series, “The Rise of Model Checker: Verifying Blockchain Monitors In and Near Realtime”, where we address these important questions: “How to verify monitor specs, and what is the verification complexity?”, as well as “How to practically check them on the live blockchain?”

Development of Solarkraft was supported by the Stellar Development Foundation with a generous Activation Award from the Stellar Community Fund of 50,000 USD in XLM.

If you want to pick up my brain for a few hours, drop me an email. After that, we can switch to a messenger of your choice (e.g., Signal, Telegram, or others) or have a call. For short consultations, I accept payments via Stripe. I also consider longer-term engagements (from weeks to months), which would require a contract. You can see my portfolio at konnov.phd. I am based in Austria (CET/CEST) and have experience working with clients from the US, UK, and EU.

The comments below are powered by GitHub discussions via Giscus. To leave a comment, either authorize Giscus, or leave a comment in the GitHub discussions directly.